Technology is transforming our society. Since the dawn of humanity, approximately 2 to 3 million years ago, technology has driven revolutionary change. From the rudimentary stone tools of the Stone Age, the steam engine in the late 17th century and the radio in the late 1800s to the first transistor, built at Bell Laboratories in 1947 and paving the way for modern computers, technology has been synonymous with change & progress, and humanity has advanced in tandem with it.

The invention of the computer was a landmark achievement for the scientific community, and subsequent advancements took the simple computational machine to astounding heights. Today, we will look into one of the most significant technological trends of the 21st century, one that is sending ripples across sectors: Artificial Intelligence.

So, without further ado, let’s start exploring.

A Look At Artificial Intelligence & Its Branches

Can a machine think on its own? Can a machine replicate everything that a human brain does? These intriguing questions have kept many up at night.

Driven by our inherent inquisitiveness, we have long looked for ways to design intelligent machines, and the invention of programmable computers was a significant boost in that direction. Gottfried Leibniz, George Boole, Alan Turing, Alonzo Church and David Hilbert were great thinkers who contemplated, studied and published works on automata, formal reasoning and mathematical logic, the groundwork of AI.

Mathematics, statistics, computer programming and powerful digital integrated circuits have now made it possible for automata to replicate formal reasoning, within certain limits. The groundwork and ideas behind AI were conceptualized, and even implemented to some extent, in the last century, but it is only in the second decade of the new millennium that we have witnessed considerable advancements in the field.

Artificial Intelligence is a vast field with applications across many domains, including healthcare, manufacturing, HR and sports. Is AI this generation’s biggest innovation? How much does it really influence our daily lives? Massive data processing facilities and hardware now allow scientists and engineers to design AI models capable of carrying out a vast number of intelligent tasks, mimicking human logic & reasoning. Almost every software or technology course today delves into AI in one way or another. Renowned universities such as Stanford, Harvard, MIT, UC Berkeley and ANU have students develop their own basic AI models and design neural networks for their software engineering assignments.

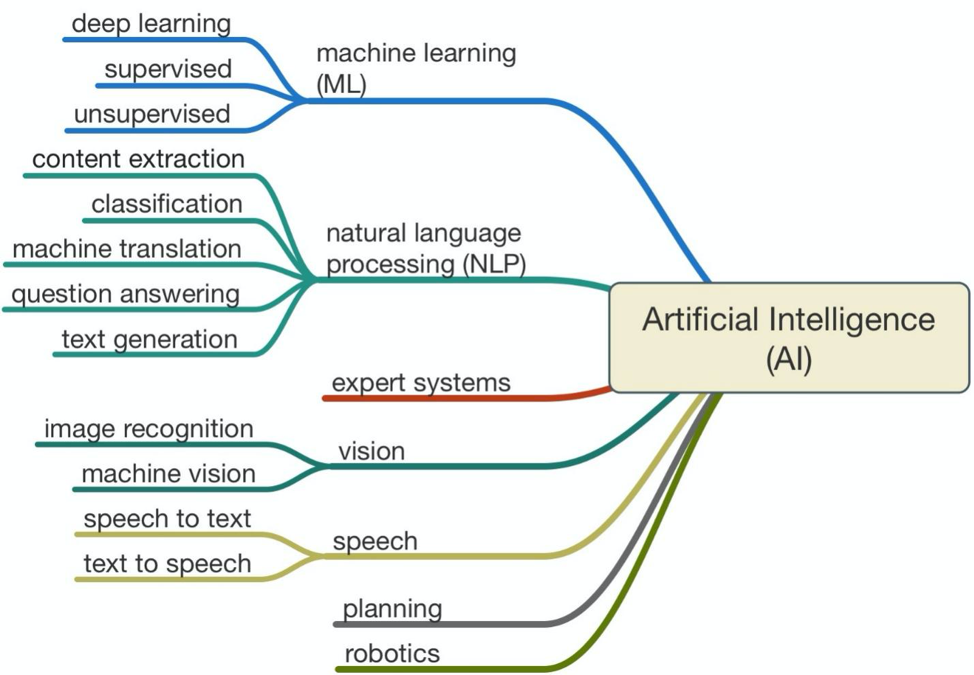

The following subject areas are of considerable interest to AI, as they require AI models to operate and behave like natural intelligence. These areas of interest, or disciplinary branches, are some of the field’s key concepts, each with its own application areas.

Fuzzy Logic & Logical AI

The late Professor John McCarthy, one of the founding fathers of AI, held that everything a program knows about its environment and the world, the tasks it needs to perform and the steps toward achieving the correct outcomes is represented in some mathematical, logical language.

This branch studies AI systems in which the program must infer, from that logical representation, the actions needed to achieve a goal.
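
To make this concrete, here is a minimal sketch of logical inference by forward chaining over simple if-then rules. The facts, the rule format and the `forward_chain` helper are hypothetical illustrations rather than McCarthy’s formalism; real logical-AI systems use far richer languages such as first-order logic.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is known, the conclusion follows.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Repeatedly apply rules until no new facts can be inferred."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical world description for a robot deciding what to do.
facts = {"door_closed", "goal_is_kitchen", "robot_in_hall"}
rules: List[Rule] = [
    (frozenset({"robot_in_hall", "goal_is_kitchen"}), "must_enter_kitchen"),
    (frozenset({"must_enter_kitchen", "door_closed"}), "action_open_door"),
    (frozenset({"action_open_door"}), "action_walk_through"),
]

inferred = forward_chain(facts, rules)
actions = sorted(f for f in inferred if f.startswith("action_"))
print(actions)  # ['action_open_door', 'action_walk_through']
```

The program is never told to open the door; it infers that action from what it knows about the world and its goal.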

Search

AI programs examine numerous possibilities and probabilities with increasing efficiency in search of the best approach to a particular problem. Game playing, pathfinding and constraint-satisfaction problems all rely on AI search algorithms.
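
Pathfinding is a good illustration of search. The sketch below is a minimal A* search on a small grid, assuming 4-way movement, unit step costs and the Manhattan distance as the heuristic; the grid itself is made up for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]


def a_star(grid: List[str], start: Pos, goal: Pos) -> Optional[List[Pos]]:
    """A* search on a 4-connected grid; '#' cells are walls, each step costs 1."""
    rows, cols = len(grid), len(grid[0])

    def h(p: Pos) -> int:
        # Manhattan distance never overestimates the true cost, so A* stays optimal.
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap: List[Tuple[int, int, Pos]] = [(h(start), 0, start)]
    came_from: Dict[Pos, Pos] = {}
    best_g: Dict[Pos, int] = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk back through the recorded parents to rebuild the path.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > best_g.get(current, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable


grid = [
    "....",
    ".##.",
    "....",
]
path = a_star(grid, (0, 0), (2, 3))
print(len(path) - 1)  # 5
```

The heuristic is what makes the search efficient: cells that lead away from the goal are pushed down the priority queue and are often never expanded.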

Perception and Pattern Recognition

Pattern recognition algorithms are widely used in computer vision and image processing tasks. Feature detection and template matching are two of the critical underlying processes in AI pattern recognition.
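
Template matching can be sketched in a few lines: slide a small template over a grayscale image and score each position by the sum of squared differences (SSD), where a lower score means a closer match. This is a minimal illustration on a made-up image; practical systems use optimized libraries and more robust scores such as normalized cross-correlation.

```python
from typing import List, Tuple

Image = List[List[int]]


def match_template(image: Image, template: Image) -> Tuple[Tuple[int, int], float]:
    """Return the top-left corner where the template fits best (lowest SSD) and its score."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best_pos, best_score = (0, 0), float("inf")
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            score = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score


# Tiny hypothetical grayscale image containing one bright 2x2 pattern.
image = [
    [0, 0, 0, 0, 0],
    [0, 9, 8, 0, 0],
    [0, 7, 9, 0, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 8], [7, 9]]
print(match_template(image, template))  # ((1, 1), 0)
```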

Representation

AI systems use mathematical logic to represent information and knowledge about the world.

Inference & Commonsense Reasoning

This area covers statistical inference, mathematical & logical deduction and non-monotonic logic, all of which underpin AI systems that infer and reason.

Systems involving non-monotonic and commonsense reasoning are concerned with simulating human presumptions and our intuitive (“folk”) understanding of psychology and physics, and they represent one of the most challenging aspects of AI. Commonsense reasoning is essential to achieving human-level performance in many kinds of tasks.
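
The classic “Tweety” example shows what makes such reasoning non-monotonic: a conclusion drawn by default is withdrawn when new facts arrive. The sketch below hard-codes a single default rule (“birds fly unless known to be an exception”) over a hypothetical knowledge base; it illustrates the idea only and is not an implementation of a formal non-monotonic logic such as default logic or circumscription.

```python
from typing import Dict, Set

# Hypothetical knowledge base: the classes each individual is known to belong to.
kinds: Dict[str, Set[str]] = {
    "tweety": {"bird"},
    "pingu": {"bird", "penguin"},
}

# Known exceptions to the default "birds fly".
exceptions: Set[str] = {"penguin", "ostrich"}


def can_fly(name: str) -> bool:
    """Default rule: a bird flies unless it belongs to a known exception class."""
    k = kinds.get(name, set())
    return "bird" in k and not (k & exceptions)


print(can_fly("tweety"))  # True: nothing contradicts the default
kinds["tweety"].add("penguin")  # new information arrives
print(can_fly("tweety"))  # False: the earlier conclusion is withdrawn
```

In classical (monotonic) logic, adding a fact can never remove a conclusion; here it does, which is closer to how people reason with incomplete information.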

Learning

Machine learning, deep learning and artificial neural networks are the most prominent areas of AI today. Learning from training data and experience is critical for AI programs, but limitations in how information can be represented remain a significant constraint.
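
The perceptron, one of the earliest learning algorithms and a building block of artificial neural networks, shows “learning from training” in its simplest form. In this minimal sketch it learns the logical AND function from four labelled examples; the learning rate and epoch count are arbitrary assumptions.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Fire (output 1) if the weighted sum of inputs plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data: List[Sample], epochs: int = 20, lr: float = 0.1):
    """Classic perceptron rule: nudge the weights toward each misclassified example."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            err = y - predict(w, b, x)  # 0 if correct, +1 or -1 if wrong
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


# Training data for logical AND (linearly separable, so the perceptron converges).
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_data)
print([predict(w, b, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

Nothing about AND is programmed in; the weights are adjusted from errors until the outputs match the examples. Deep learning stacks many such units and trains them with gradient descent instead of this simple rule.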

Epistemology and Ontology

Studying the different kinds of knowledge, and the kinds of things that exist, is an essential part of building AI models. Systems need to deal with different inputs & parameters and must be well aware of their properties.
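
A common way to give a system awareness of “the things that exist” is an ontology: a hierarchy of concepts linked by “is-a” relations, where properties are inherited from general to specific concepts. The mini-ontology below is hypothetical and only sketches the inheritance idea; real ontologies are usually written in dedicated languages such as OWL.

```python
from typing import Dict, List, Optional

# Hypothetical mini-ontology: each concept names its parent ("is-a") and its own properties.
IS_A: Dict[str, Optional[str]] = {
    "thing": None,
    "vehicle": "thing",
    "car": "vehicle",
    "electric_car": "car",
}
PROPERTIES: Dict[str, Dict[str, object]] = {
    "thing": {"physical": True},
    "vehicle": {"moves": True},
    "car": {"wheels": 4, "fuel": "petrol"},
    "electric_car": {"fuel": "electricity"},  # overrides the inherited value
}


def properties_of(concept: str) -> Dict[str, object]:
    """Collect properties along the is-a chain; more specific concepts win."""
    chain: List[str] = []
    node: Optional[str] = concept
    while node is not None:
        chain.append(node)
        node = IS_A[node]
    merged: Dict[str, object] = {}
    for c in reversed(chain):  # from most general to most specific
        merged.update(PROPERTIES.get(c, {}))
    return merged


print(properties_of("electric_car"))
# {'physical': True, 'moves': True, 'wheels': 4, 'fuel': 'electricity'}
```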

Heuristics

Heuristic classification is a major application of AI systems. ‘Expert systems’ solve different kinds of problems by matching problem data against a ready set of known solutions, devising problem-solving strategies with an acceptable level of accuracy.
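
Heuristic classification can be sketched in two steps: abstract raw data into qualitative findings, then heuristically match those findings against a stored set of solutions. The car-diagnosis rules, thresholds and scoring below are hypothetical and far simpler than a real expert system.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical "expert knowledge": each known solution lists the findings that suggest it.
SOLUTIONS: Dict[str, Set[str]] = {
    "flat_battery": {"no_crank", "dim_lights"},
    "starter_fault": {"no_crank", "lights_ok"},
    "out_of_fuel": {"cranks", "fuel_low"},
}


def abstract(readings: Dict[str, float]) -> Set[str]:
    """Data abstraction: turn raw measurements into qualitative findings."""
    findings = set()
    findings.add("cranks" if readings["crank_rpm"] > 50 else "no_crank")
    findings.add("dim_lights" if readings["light_level"] < 0.5 else "lights_ok")
    if readings["fuel_fraction"] < 0.05:
        findings.add("fuel_low")
    return findings


def classify(readings: Dict[str, float]) -> List[Tuple[str, float]]:
    """Heuristic match: rank stored solutions by the share of their findings present."""
    findings = abstract(readings)
    scores = [
        (name, len(needed & findings) / len(needed))
        for name, needed in SOLUTIONS.items()
    ]
    return sorted(scores, key=lambda s: s[1], reverse=True)


ranking = classify({"crank_rpm": 0, "light_level": 0.2, "fuel_fraction": 0.6})
print(ranking[0])  # ('flat_battery', 1.0)
```

The score is a rough heuristic rather than a guarantee, which is exactly the trade-off described above: useful answers with acceptable, not perfect, accuracy.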

Implementations of the above concepts and branches of AI, and subsequent advancements, have found numerous applications in technology. AI is an evolving discipline, and research and development are central to the field. Classifying and segregating AI is an arduous task: with so many different techniques, methodologies, concepts and applications, it is an expansive and intricate field whose aspects often overlap.

Let’s now take a look at some of the most astounding developments and breakthroughs in the field of AI that are ushering in a new age of technology.

Remarkable AI Advancements In Recent Times

The following is a shortlist of some astounding breakthroughs in AI expected to bring about sweeping changes across industries and economies.

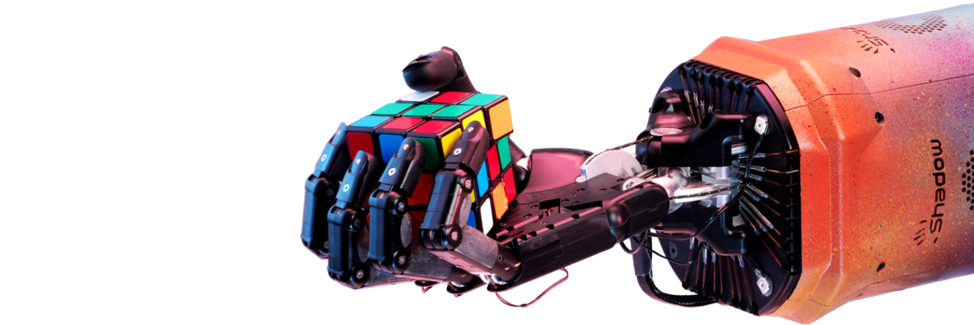

- Dexterous Robot Hand

OpenAI, a leading AI research company, has combined two of the most prominent AI branches, neural networks and robotics, to design a robotic hand, code-named ‘Dactyl’, capable of manipulating and solving a Rubik’s Cube with a single hand. The networks were trained in simulation and then applied what they had learned to a complex physical-world problem.

OpenAI combined reinforcement learning, a type of machine learning in which an agent learns by trial and error from rewards, with a technique called automatic domain randomization. This technique generates progressively more challenging simulated environments as the model improves, so that the resulting neural networks and robotic devices can tackle complex real-world tasks under conditions they never encountered during training.
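
Both ideas can be sketched in miniature. The example below is a hypothetical toy, not OpenAI’s code or environment: tabular Q-learning on a six-cell corridor, where each episode draws a random “slip” probability that reverses the chosen move (domain randomization), and the range of that randomization widens whenever the agent succeeds in at least 80 of the last 100 episodes, a much-simplified stand-in for automatic domain randomization. All constants are arbitrary assumptions.

```python
import random
from typing import List, Tuple

N_STATES = 6          # corridor cells 0..5; cell 5 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right


def step(state: int, action: int, slip: float, rng: random.Random) -> Tuple[int, float, bool]:
    """Move along the corridor; with probability `slip` the move is reversed."""
    move = ACTIONS[action]
    if rng.random() < slip:
        move = -move
    nxt = min(max(state + move, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 3000, seed: int = 0) -> Tuple[List[List[float]], float]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    alpha, gamma, epsilon = 0.1, 0.9, 0.1
    slip_max, successes = 0.0, 0           # randomization range starts narrow
    for ep in range(1, episodes + 1):
        slip = rng.uniform(0.0, slip_max)  # domain randomization: new dynamics each episode
        s, done = 0, False
        for _ in range(50):
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)       # explore, or break ties randomly
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = step(s, a, slip, rng)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # Q-learning update
            s = s2
            if done:
                break
        successes += done
        if ep % 100 == 0:
            # ADR-style rule: if the agent copes with the current range, make it harder.
            if successes >= 80:
                slip_max = min(slip_max + 0.05, 0.3)
            successes = 0
    return q, slip_max


q, slip_max = train()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy, slip_max)
```

The agent is never told which way the goal lies; it learns from reward alone, and because it trains under a widening range of slip probabilities, the learned policy does not depend on one exact setting of the environment.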

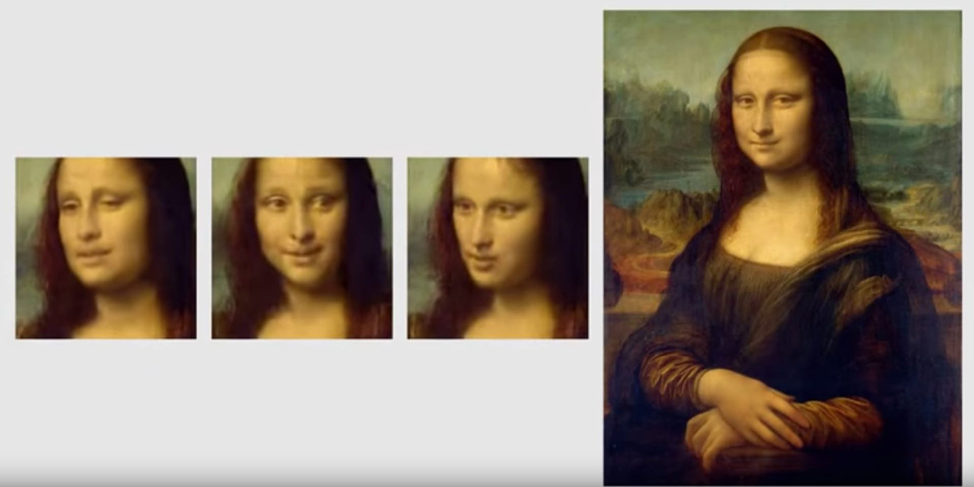

- Deepfake

Generative Adversarial Networks (GANs) are artificial neural networks that generate data with features similar to those of their training data. Researchers at Samsung built a GAN-based model that can animate a still image of a face using the facial movements from a video. Researchers also use GANs for high-fidelity image synthesis and to let ML models create realistic human expressions.
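
The adversarial idea can be stated compactly. In the original GAN formulation, a generator $G$ maps random noise $z$ to synthetic samples and a discriminator $D$ outputs the probability that a sample is real. The two networks are trained against each other on the minimax objective below, where $p_{\text{data}}$ is the distribution of the training data and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to push $D(x)$ toward 1 on real data and $D(G(z))$ toward 0 on generated data, while the generator tries to make $D(G(z))$ large. Over training, this competition pushes the generator’s outputs toward the features of the training data.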

- Transformers

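Transformers are a family of neural network architectures that lie at the heart of natural language processing, language modelling and machine translation. Because it processes all positions of a sequence in parallel rather than one step at a time, the transformer can be trained much faster than recurrent networks, and it has outperformed both recurrent and convolutional models on tasks such as machine translation. The transformer uses a self-attention mechanism, which weighs how relevant each token is to every other token, to capture the meaning and context of a piece of content.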
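
At the core of self-attention is scaled dot-product attention, $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$. The dependency-free sketch below computes it for a single attention head; the tiny input and the identity projection matrices are made-up assumptions, and real transformers add multiple heads, learned weights, positional information and feed-forward layers.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each token's attention over all tokens
    return matmul(weights, v)  # each output is a weighted mix of value vectors


# Three hypothetical 2-dimensional token embeddings and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention(x, identity, identity, identity)
print(out)
```

Every token’s output depends on every other token in one matrix operation, which is why the computation parallelizes so well compared with a recurrent network’s step-by-step processing.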

- AI-Generated Synthetic Text

Generative Pre-trained Transformer (GPT) models are transformer-based natural language processing architectures capable of generating text automatically. Using deep learning, OpenAI designed an NLP model that, given a prompt, can generate synthetic text that follows the subject & context of the given topic or sentence. Amid ethical concerns, numerous online article spinners & automatic essay-writing tools employ such models.
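
GPT-style models generate text autoregressively: they predict a probability distribution over the next token, append a chosen token, and repeat. The sketch below shows only that decoding loop with greedy selection; the hand-written probability table is a hypothetical stand-in for a trained transformer, and production systems usually sample (for example with a temperature or top-k setting) rather than always taking the most likely token.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a trained language model: next-token probabilities.
# A real GPT model computes these with a transformer over the whole context.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<s>": {"ai": 0.6, "robots": 0.4},
    "ai": {"is": 0.7, "<e>": 0.3},
    "robots": {"are": 0.8, "<e>": 0.2},
    "is": {"changing": 0.9, "<e>": 0.1},
    "are": {"learning": 0.9, "<e>": 0.1},
    "changing": {"industries": 0.8, "<e>": 0.2},
    "learning": {"<e>": 1.0},
    "industries": {"<e>": 1.0},
}


def next_token_probs(context: List[str]) -> Dict[str, float]:
    return TOY_MODEL[context[-1]]  # the toy only looks at the last token


def generate(model: Callable[[List[str]], Dict[str, float]], max_tokens: int = 10) -> List[str]:
    """Greedy autoregressive decoding: repeatedly append the most likely next token."""
    tokens = ["<s>"]  # start-of-text token
    for _ in range(max_tokens):
        probs = model(tokens)
        best = max(probs, key=probs.get)
        if best == "<e>":  # end-of-text token
            break
        tokens.append(best)
    return tokens[1:]


print(" ".join(generate(next_token_probs)))  # ai is changing industries
```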

- AI-enabled Chips

Hardware limitations are probably one of the most significant constraints in the field of AI. Even today’s most powerful desktop CPUs, such as the AMD Ryzen 9 5950X or Intel Core i9-10900K, are poorly suited to the resource-hungry, massively parallel arithmetic of AI workloads. This is why GPUs, with thousands of cores that run computations in parallel, are used alongside CPUs to train models and process large volumes of structured or unstructured data.
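
A rough back-of-the-envelope calculation shows why. A single dense neural-network layer that multiplies a batch of $b$ input vectors of length $n$ by an $n \times m$ weight matrix performs $b \cdot n \cdot m$ multiply-add operations, or about $2bnm$ floating-point operations (FLOPs). With illustrative values of $b = 1024$ and $n = m = 4096$ (round numbers chosen as an assumption, not figures from a specific model):

$$
2bnm = 2 \times 2^{10} \times 2^{12} \times 2^{12} = 2^{35} \approx 3.4 \times 10^{10}\ \text{FLOPs}
$$

That is for one layer’s forward pass on one batch; training repeats this across many layers, a backward pass and a great many batches. Because each output element can be computed independently, the work maps naturally onto a GPU’s many parallel cores.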

AI-enabled processors are one way around such hardware hurdles. Some of these ‘intelligent’ chips employ a neuromorphic architecture, modelled on the way biological neurons communicate, to optimize AI tasks and improve performance efficiency.
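
Neuromorphic hardware is typically built around spiking neurons, which communicate through discrete events (spikes) rather than continuous values. A common simplified model is the leaky integrate-and-fire (LIF) neuron; the sketch below simulates one in discrete time. The time constant, threshold and input values are arbitrary assumptions, and actual chips implement such dynamics in dedicated circuits rather than in software.

```python
from typing import List


def lif_spikes(current: List[float], tau: float = 10.0, threshold: float = 1.0, dt: float = 1.0) -> List[int]:
    """Leaky integrate-and-fire neuron: the membrane potential leaks toward 0,
    integrates the input current, and emits a spike (then resets) at the threshold."""
    v = 0.0
    spikes = []
    for t, i_in in enumerate(current):
        v += dt * (-v / tau + i_in)  # Euler step of dv/dt = -v/tau + I
        if v >= threshold:
            spikes.append(t)  # record the time step of the spike
            v = 0.0  # reset after firing
    return spikes


print(lif_spikes([0.3] * 20))  # [3, 7, 11, 15, 19]
print(lif_spikes([0.05] * 100))  # []: the leak balances the input below threshold
```

Because a spiking neuron only produces output when it fires, hardware built this way can stay largely idle between events, which is one source of the efficiency gains such chips aim for.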

- AI-supplemented Workforce

Given the increasing influence and capabilities of AI, the idea of a hybrid workforce is nothing alien. AI, in the form of robotics, is already central to many industries. With the advent of learning models and vision systems, AI is expected not just to assist human beings but to work closely alongside them toward shared goals and optimal performance.

Organizations across sectors are incorporating AI technologies such as cognitive AI & robotic process automation. AI-powered tools, bots and even robotic workers are likely to become an integral part of the workplace of the future.

And with that, we draw this article to a close. We hope it delivered some useful information about one of the most significant advancements in technology and its many implications for humanity.