Introduction:

With advances in artificial intelligence and computer vision technology, people cannot resist asking what it takes to turn self-driving cars into a reality. In the digital era of machine learning and artificial intelligence, the design and development of self-driving cars is a hot topic worldwide. A key thing that helps autonomous machines understand the world is data annotation. An autonomous vehicle needs the skills to handle complex scenes, and this is where artificial intelligence plays its role in autonomous driving.

Let’s develop an understanding of data annotation and what makes self-driving cars a reality.

What is data annotation?

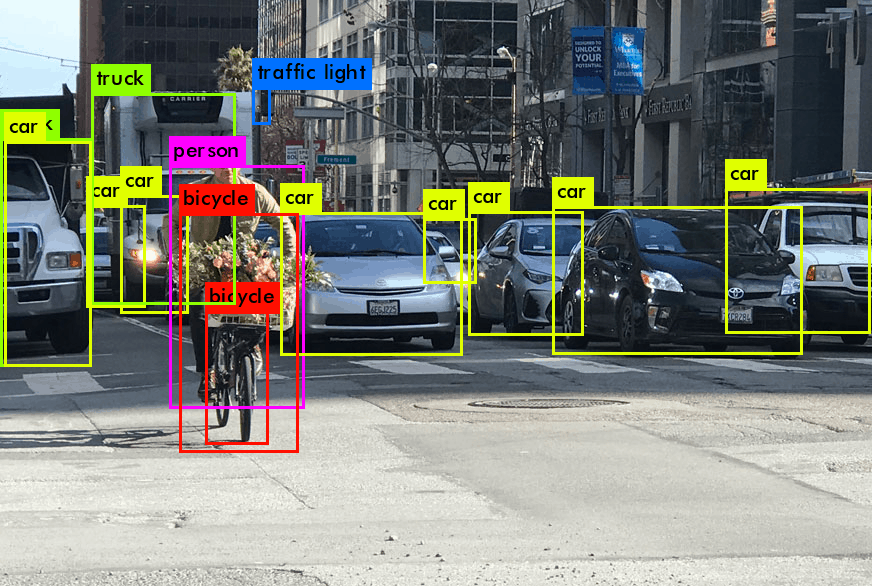

Data annotation is the process of labeling data, such as video, images, text, and speech, with information about a particular object or feature of interest. It is used in machine learning to turn raw datasets into training data. Labeling connects each piece of data to its meaning so that machine learning models can recognize it. Highly accurate annotation is important for the performance of machine learning algorithms and for the safety of the public.

Depending on the type of data, data annotation can be of different types:

- Image annotation – labeling of objects or regions in an image

- Video annotation – labeling of video clips for the detection of objects

- Text categorization – creating labels and associating them with a digital file

- Semantic annotation – tagging of a text document with concepts

- Audio annotation – labeling recorded speech or audio in a format that a machine learning model can understand

- LiDAR annotation – labeling of 3D point clouds, in which distance is measured from the travel time of light and each point has x, y, and z coordinates
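To make the idea of a label concrete, here is a minimal sketch of what a single image annotation might look like. The `BoxAnnotation` class, its field names, the file name, and the `is_valid` check are all hypothetical and chosen for illustration; real annotation tools each define their own formats.

```python
from dataclasses import dataclass


@dataclass
class BoxAnnotation:
    """One labeled object in one image (hypothetical format)."""
    image_file: str  # which image the label belongs to
    label: str       # what the object is, e.g. "pedestrian"
    x_min: int       # left edge of the bounding box, in pixels
    y_min: int       # top edge
    x_max: int       # right edge
    y_max: int       # bottom edge


def is_valid(box: BoxAnnotation, image_width: int, image_height: int) -> bool:
    """Basic quality check: non-empty label, box inside the image, positive area."""
    return (
        bool(box.label)
        and 0 <= box.x_min < box.x_max <= image_width
        and 0 <= box.y_min < box.y_max <= image_height
    )


# Made-up example values, not taken from any real dataset.
example = BoxAnnotation("frame_0001.png", "pedestrian", 120, 80, 180, 240)
print(is_valid(example, image_width=640, image_height=480))
```

A simple check like this is one way annotation accuracy can be enforced before the labels reach a model.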

How do self-driving cars work? How do they see the road?

Turning the self-driving car into a reality requires quality labeled data. Refined, scalable, high-quality data is important for the practicality, high-speed navigation, and safety of self-driving cars.

An autonomous vehicle relies on three kinds of sensors: cameras, radar, and LiDAR. Together they play a role similar to the human eyes and brain.

In self-driving cars, cameras are mounted at multiple angles, each giving a clear 120° view. For wide scenes such as parking lots, fish-eye cameras are most appropriate.

When a camera fails to detect an object or visibility is poor, the radar emits radio waves to detect the object’s location and speed.

The sensor that allows self-driving cars to see the road in three dimensions is LiDAR. Its 360° rotation lets the car see the road in all directions. It works by emitting light pulses that reflect off an object and return to the sensor, and the travel time of each pulse gives the distance. The resulting 3-dimensional point cloud is what allows the car to see the road.
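The distance measurement behind a point cloud can be sketched in a few lines. This is a simplified illustration, assuming an ideal sensor that reports the round-trip time of a pulse along with the beam's horizontal (azimuth) and vertical (elevation) angles; the function names are hypothetical and real LiDAR units apply further calibration.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance(round_trip_s: float) -> float:
    """Distance to the object: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(distance_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range reading plus beam angles into an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        distance_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds hit something roughly 30 m away.
distance = tof_to_distance(200e-9)
print(distance, to_point(distance, azimuth_deg=45.0, elevation_deg=0.0))
```

Repeating this for every pulse as the sensor rotates produces the set of points that annotators then label.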

All these sensors work together to produce raw data, which is used to train the parameters of neural networks that are then deployed in self-driving cars. Part of what the networks learn is the correlation between the steering angle and the markings on the street.
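As a toy illustration of learning such a correlation, the sketch below fits a straight line between how far the car sits from the lane center and the steering angle a driver applied. This is not a neural network; a simple least-squares line stands in for one, and the numbers are invented for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented training data: lane offset in meters -> steering angle in degrees.
lane_offsets_m = [-1.0, -0.5, 0.0, 0.5, 1.0]
steering_deg = [-4.0, -2.0, 0.0, 2.0, 4.0]

slope, intercept = fit_line(lane_offsets_m, steering_deg)


def predict_steering(offset_m: float) -> float:
    """Predict a steering angle for a new lane offset using the fitted line."""
    return slope * offset_m + intercept


print(predict_steering(0.25))
```

A real system learns from images rather than a single offset value, but the principle is the same: labeled examples tie what the sensors see to the action the car should take.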

In addition to these sensors, self-driving cars are now developed with annotated data from inertial measurements, which allows automatic monitoring and control of the car's target location and desired speed.